Convolutional Neural Network

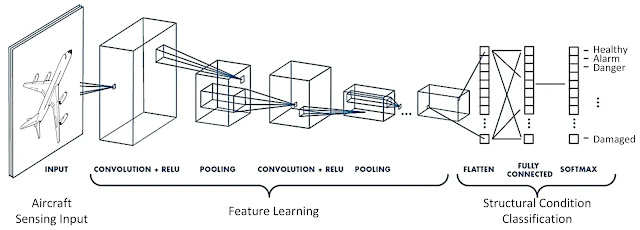

A CNN (ConvNet) is a deep learning algorithm designed for images. It takes an input image, assigns learnable weights and biases to it, and learns to differentiate one image from another. Compared with many other image-classification approaches, a ConvNet needs much less preprocessing. In primitive methods, filters are hand-engineered; with enough training, ConvNets can learn these filters themselves.

The architecture of a ConvNet is analogous to the connectivity pattern of neurons in the human brain and was inspired by the organization of the visual cortex.

Individual neurons respond to stimuli only in a restricted region of the visual field, known as the receptive field. A collection of such overlapping fields covers the entire visual area.

What is an Image?

An image is nothing but a matrix of pixel values.

A ConvNet is able to capture the spatial and temporal dependencies in an image through the application of relevant filters.

There are many color spaces, such as RGB, grayscale, CMYK, and HSV. An RGB image is separated into three color planes: red, green, and blue. You can imagine how computationally intensive processing becomes as image dimensions grow. The role of the ConvNet is to reduce images into a form that is easier to process, without losing the features that are critical for a good prediction.
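Since an image is just a matrix of pixel values, an RGB image can be stored as a height x width x 3 array and split into its three color planes. The sketch below uses plain Python nested lists and made-up pixel values (an assumption for illustration; real code would usually rely on an array library).

```python
# A tiny 2x2 RGB image stored as height x width x channels (values 0-255).
# The pixel values are made up purely for illustration.
image = [
    [[255, 0, 0], [0, 255, 0]],
    [[0, 0, 255], [255, 255, 255]],
]

def split_channels(img):
    """Return one 2D matrix (color plane) per channel."""
    num_channels = len(img[0][0])
    return [
        [[pixel[c] for pixel in row] for row in img]
        for c in range(num_channels)
    ]

red, green, blue = split_channels(image)
print(red)    # [[255, 0], [0, 255]]
print(green)  # [[0, 255], [0, 255]]
print(blue)   # [[0, 0], [255, 255]]
```

Even a modest 1920x1080 RGB image holds 1920 x 1080 x 3 = 6,220,800 values, which is why reducing images to a more compact form matters.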

Process of Operation:

- Input

- Convolution Operation

- Padding

- Pooling

- Classification

Convolutional Layer (Kernel Layer)

Image dimensions = 5 (height) x 5 (breadth) x 1 (number of channels; an RGB image would have 3). The kernel (filter), K, carries out the convolution operation in this first part of the convolutional layer. Here the kernel is a 3x3x1 matrix.

Kernel matrix (K):

1 0 1
0 1 0
1 0 1

The filter moves to the right by the stride value until it has covered the full width of the image. It then returns to the left edge, moves down by the same stride value, and repeats the process until the entire image has been traversed.
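The sliding-window procedure described above can be sketched in plain Python. The 5x5x1 image values are hypothetical (chosen only for illustration); the kernel is the 3x3x1 matrix K given above. Like most deep learning frameworks, the sketch does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
# Hypothetical 5x5x1 input image (binary values chosen only for illustration).
image = [
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
]

# The 3x3x1 kernel K from the text.
kernel = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]

def convolve2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the feature map."""
    n_rows, n_cols = len(image), len(image[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    output = []
    # Move down the image one stride at a time...
    for top in range(0, n_rows - k_rows + 1, stride):
        row = []
        # ...and within each band, move right one stride at a time.
        for left in range(0, n_cols - k_cols + 1, stride):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[top + i][left + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

print(convolve2d(image, kernel))            # [[4, 3, 4], [2, 4, 3], [2, 3, 4]]
print(convolve2d(image, kernel, stride=2))  # [[4, 4], [2, 4]]
```

With stride 1 the 3x3 kernel fits in 3 positions along each axis, giving a 3x3x1 convolved feature; with stride 2 it fits in only 2.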

Convolution Operation

The objective of convolution is to extract high-level features from the image. A ConvNet is not limited to a single convolutional layer: conventionally, the first ConvLayer extracts low-level features such as edges and color, and the layers that follow build higher-level features on top of them.
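To illustrate the kind of low-level feature a first layer picks up, the sketch below applies a hand-picked vertical-edge kernel (a Prewitt-style filter, an assumption for illustration; a ConvNet would learn its own kernel values) to a hypothetical 6x6 image whose left half is bright and right half is dark.

```python
def convolve2d(image, kernel):
    """Stride-1, unpadded sliding-window sum (cross-correlation) for a square kernel."""
    f = len(kernel)
    return [
        [sum(image[r + i][c + j] * kernel[i][j] for i in range(f) for j in range(f))
         for c in range(len(image[0]) - f + 1)]
        for r in range(len(image) - f + 1)
    ]

# Hypothetical 6x6 image: bright left half (10), dark right half (0).
image = [[10, 10, 10, 0, 0, 0] for _ in range(6)]

# Hand-picked vertical-edge kernel (Prewitt-style).
vertical_edge = [
    [1, 0, -1],
    [1, 0, -1],
    [1, 0, -1],
]

for row in convolve2d(image, vertical_edge):
    print(row)  # every row is [0, 30, 30, 0]
```

The response is zero over uniform regions and large only where the brightness changes, which is exactly where the vertical edge lies.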

Padding

Padding is the method of adding layers of zeros around the border of the image to overcome the following issues:

- Shrinking outputs

- Losing information at the corners and edges of the image

Depending on the padding, the convolved feature either shrinks relative to the input or keeps (or even increases) its dimensions. The two common choices are Same Padding and Valid Padding.

Same Padding

When we pad the 5x5x1 image with a one-pixel border of zeros, making it 7x7x1, and then apply the 3x3x1 kernel over it, the convolved matrix turns out to be 5x5x1, the same size as the input. This is Same Padding.

Output size = n + 2p - f + 1

where n = image size, f = filter size, p = padding size
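Requiring the output size to equal the input size shows how much padding Same Padding needs, assuming an odd filter size f so that p comes out as a whole number:

$$
n + 2p - f + 1 = n \;\Longrightarrow\; 2p = f - 1 \;\Longrightarrow\; p = \frac{f - 1}{2}
$$

For a 5x5x1 image and a 3x3x1 kernel, n = 5 and f = 3 give p = 1, and the output size is 5 + 2(1) - 3 + 1 = 5.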

Valid Padding

Valid Padding means that no padding is applied (p = 0), so the output is smaller than the input: for example, a 5x5x1 image convolved with a 3x3x1 kernel gives a 3x3x1 output.

Output size = n - f + 1

where n = image size, f = filter size
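Both formulas can be wrapped in one helper. The stride parameter `s` is an addition beyond the formulas in this post (the standard generalization with floor division); with `s=1` the helper reduces exactly to n + 2p - f + 1, and with `p=0` to n - f + 1.

```python
def conv_output_size(n, f, p=0, s=1):
    """Output height/width for an n x n input and an f x f filter.

    p: zero padding added on each side (0 means Valid Padding).
    s: stride; for s > 1 the floor division is the usual generalization.
    """
    return (n + 2 * p - f) // s + 1

print(conv_output_size(5, 3))       # Valid Padding: 5 - 3 + 1 = 3
print(conv_output_size(5, 3, p=1))  # Same Padding: 5 + 2 - 3 + 1 = 5
print(conv_output_size(5, 3, s=2))  # Valid Padding with stride 2: 2
```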

Pooling

The pooling layer is the next block of a CNN. Its aim is to reduce the spatial size of the convolved feature. This reduces the computational power required to process the data and also extracts the dominant features.

Two types of pooling:

1. Max Pooling

2. Average Pooling

Max & Average Pooling

Max Pooling returns the maximum value from the portion of the image covered by the Kernel. On the other hand, Average Pooling returns the average of all the values from the portion of the image covered by the Kernel.
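A minimal sketch of both pooling types in plain Python. The 2x2 window with stride 2 is a common choice but an assumption here, and the 4x4 feature map values are hypothetical.

```python
def average(values):
    """Arithmetic mean of a list of numbers."""
    return sum(values) / len(values)

def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Max or average pooling over size x size windows moved by `stride`."""
    if mode == "max":
        reduce = max
    elif mode == "average":
        reduce = average
    else:
        raise ValueError(f"unknown pooling mode: {mode!r}")

    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for top in range(0, rows - size + 1, stride):
        pooled_row = []
        for left in range(0, cols - size + 1, stride):
            window = [feature_map[top + i][left + j]
                      for i in range(size) for j in range(size)]
            pooled_row.append(reduce(window))
        pooled.append(pooled_row)
    return pooled

feature_map = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [3, 2, 1, 2],
    [1, 0, 3, 4],
]
print(pool2d(feature_map, mode="max"))      # [[6, 5], [3, 4]]
print(pool2d(feature_map, mode="average"))  # [[3.5, 2.0], [1.5, 2.5]]
```

Either way, the 4x4 feature map is reduced to 2x2, a quarter of the original number of values.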

Max Pooling also acts as a noise suppressant: it discards noisy activations altogether and performs de-noising along with dimensionality reduction. Average Pooling, on the other hand, simply performs dimensionality reduction as its noise-suppressing mechanism. For this reason, Max Pooling often performs better than Average Pooling in practice.

After going through the above process, we have enabled the model to understand the features. Next, we flatten the final output and feed it to a regular neural network for classification.

Classification (FC Layer)

Adding a Fully-Connected layer is a (usually) cheap way of learning non-linear combinations of the high-level features represented by the output of the convolutional layer; the Fully-Connected layer learns a possibly non-linear function in that space. Now that we have converted the input image into a suitable form for a Multi-Layer Perceptron, we flatten it into a column vector. The flattened output is fed to a feed-forward neural network, and backpropagation is applied in every iteration of training. Over a series of epochs, the model learns to distinguish between dominant and certain low-level features in images and to classify them using the Softmax classification technique.
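The forward pass of this classification stage can be sketched as: flatten the pooled feature maps, pass them through a fully-connected hidden layer with a ReLU non-linearity, then a fully-connected output layer followed by softmax. All weights and the pooled input below are hypothetical; in a real ConvNet the weights are learned by backpropagation, which this sketch omits.

```python
import math

def flatten(feature_maps):
    """Flatten a list of 2D feature maps into one vector, row by row."""
    return [value for fmap in feature_maps for row in fmap for value in row]

def dense(x, weights, biases):
    """Fully-connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b
            for w_row, b in zip(weights, biases)]

def relu(values):
    """Element-wise non-linearity between fully-connected layers."""
    return [max(0.0, v) for v in values]

def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical pooled output: a single 2x2 feature map.
pooled = [[[6, 5], [3, 4]]]
x = flatten(pooled)  # [6, 5, 3, 4]

# Hypothetical weights: 2 hidden units, 2 output classes.
w_hidden = [[0.1, 0.2, -0.1, 0.0], [-0.2, 0.1, 0.3, 0.1]]
b_hidden = [0.0, 0.5]
w_out = [[1.0, -1.0], [-1.0, 1.0]]
b_out = [0.0, 0.0]

hidden = relu(dense(x, w_hidden, b_hidden))
probs = softmax(dense(hidden, w_out, b_out))
print(x)
print(probs)  # two class probabilities summing to 1
```

The predicted class is simply the index of the largest probability.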

There are various architectures of CNNs available:

- LeNet

- AlexNet

- VGGNet

- GoogLeNet

- ResNet

- ZFNet
